There are a lot of great posts about what a product manager is (like here and here), but there is one problem: none of them say the same thing, because there is no globally accepted definition of the role. In some organizations the product manager keeps the trains running on time; in others the product manager is the "CEO of the product"; and in still others the product manager is the voice of the customer.

One thing that most product managers (and organizations that employ product managers) agree on is that data beats opinion, so I decided to put together a highly scientific test to figure out what a product manager really is, as defined by the "customer" that is buying product managers. In other words, I grabbed a couple dozen product manager job postings from LinkedIn, selected 9 high-quality postings (well written, specific requirements, clear scope), and then identified the common features present in each posting. This experiment was far from perfect, but I did see some patterns that I found interesting.

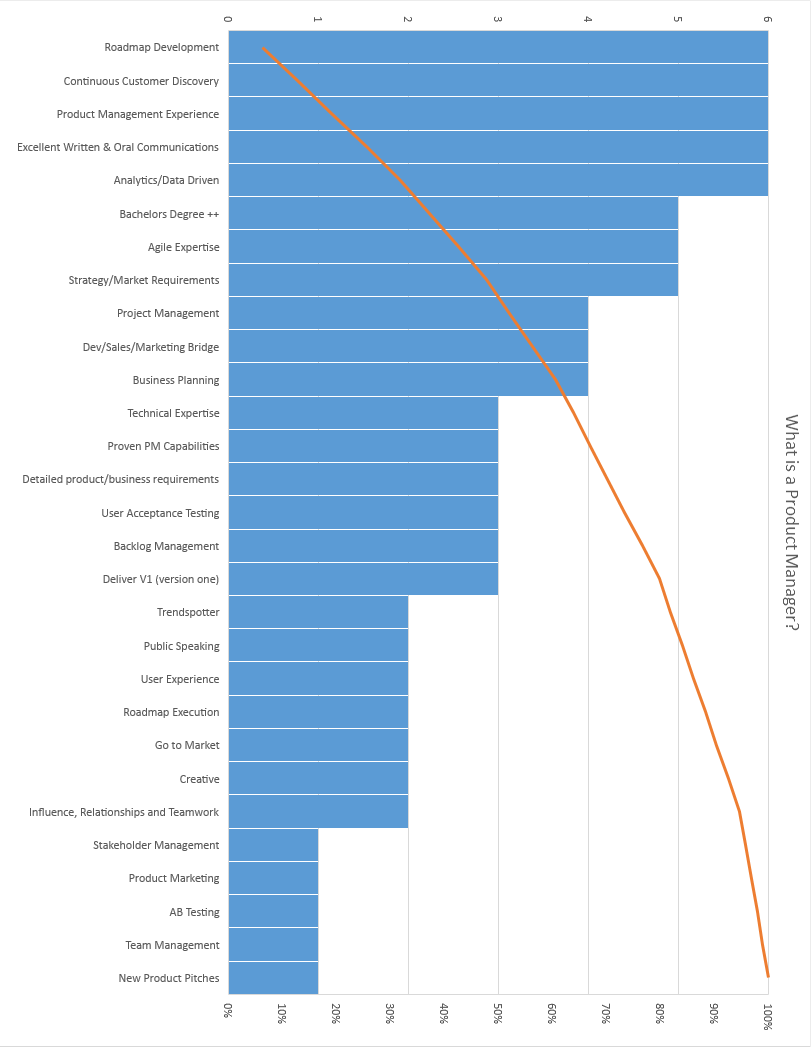

So without further ado, here is a visualization of the data:

The most interesting point to me: no job feature appeared in more than two-thirds (6 of 9) of these product management postings. Other interesting points:

- Most companies want experience managing the product roadmap, from "Define, develop and communicate the product roadmap" to "You have experience in creating product roadmaps that are focused on achieving business goals" to "Own the roadmap, influence product and technology strategy and direction (roadmap)." Fun fact: I bet no two companies agree on what a good product roadmap is. :)

- Product management experience is typically expected, and 5 years of experience seems to be the sweet spot.

- Continuous customer discovery / voice of the customer was common, but not every posting explicitly required it, which I find crazy. If I were to pick one attribute that a solid product manager needs, it would be an obsession with the customer. Product management experience can be acquired (everybody starts somewhere), and skills like building product roadmaps can be learned, but a lack of focus on the customer seems like it should be a non-starter.

One job feature that I found interesting in its absence was DevOps. I have a hunch that as customer/product feedback loops get tighter, the product manager is going to need to get closer to the DevOps process. Knowing how to get an experiment or feature into the wild, gather data on whether it is working as quickly as possible, and then either double down or revise seems like a natural skill to expect from your product manager. I am not saying that product managers will drive DevOps, but they should be familiar with it: where a feature is in the release process, when it is live (and for whom), etc. But, alas, I am on the outside here, and not one job description mentioned DevOps. Five of the postings did mention Agile, which, in combination with DevOps, forms the foundation of these feedback loops, so perhaps some familiarity with how features get operationalized and released into the wild is simply assumed to be part of the product manager's bag of tricks.

Let me know if anything jumps out at you, or if you have opinions that diverge from this highly scientific study. 🙂

Thanks.

Matt

P.S. Here is the text associated with the graphic above:

| Job Feature | Postings (of 9) |
|---|---|
| Roadmap Development | 6 |
| Continuous Customer Discovery | 6 |
| Product Management Experience | 6 |
| Excellent Written & Oral Communications | 6 |
| Analytics/Data Driven | 6 |
| Bachelor's Degree ++ | 5 |
| Agile Expertise | 5 |
| Strategy/Market Requirements | 5 |
| Project Management | 4 |
| Dev/Sales/Marketing Bridge | 4 |
| Business Planning | 4 |
| Technical Expertise | 3 |
| Proven PM Capabilities | 3 |
| Detailed Product/Business Requirements | 3 |
| User Acceptance Testing | 3 |
| Backlog Management | 3 |
| Deliver V1 (version one) | 3 |
| Trendspotter | 2 |
| Public Speaking | 2 |
| User Experience | 2 |
| Roadmap Execution | 2 |
| Go to Market | 2 |
| Creative | 2 |
| Influence, Relationships and Teamwork | 2 |
| Stakeholder Management | 1 |
| Product Marketing | 1 |
| A/B Testing | 1 |
| Team Management | 1 |
| New Product Pitches | 1 |